This blog post describes the different feature scaling functions and provides examples that show how they differ from one another.

Min/Max Scaling

The minMaxScale function scales a vector or matrix so that its values fall between a min and a max value. If the min and max values are not provided, it scales between 0 and 1. Min/max scaling is useful for comparing time series of different scales in machine learning algorithms such as k-means clustering.

Below is the sample code for scaling a matrix:

let(a=array(20, 30, 40, 50),
    b=array(200, 300, 400, 500),
    c=matrix(a, b),
    d=minMaxScale(c))

The expression above creates two arrays at different scales, combines them as the rows of a matrix, and scales the matrix with the minMaxScale function.

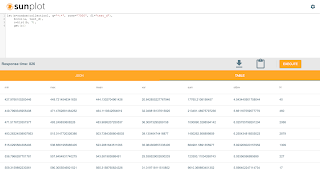

Solr responds with the vectors of the scaled matrix:

{
  "result-set": {
    "docs": [
      {
        "d": [
          [0, 0.3333333333333333, 0.6666666666666666, 1],
          [0, 0.3333333333333333, 0.6666666666666666, 1]
        ]
      },
      {
        "EOF": true,
        "RESPONSE_TIME": 0
      }
    ]
  }
}

Notice that once both vectors are brought to the same scale, they are identical. Each row of the matrix is scaled independently.
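The arithmetic behind this response can be sketched in plain Python. This is an illustration of the min-max formula, x' = min + (x - lo) * (max - min) / (hi - lo), applied row by row; it is not Solr's implementation. The function names `min_max_scale` and `min_max_scale_rows` are hypothetical, and mapping a constant vector to the minimum is an assumption.

```python
def min_max_scale(vector, new_min=0.0, new_max=1.0):
    """Linearly rescale vector so its smallest value maps to new_min
    and its largest value maps to new_max (defaults: 0 and 1)."""
    lo, hi = min(vector), max(vector)
    span = hi - lo
    if span == 0:
        # Assumption: a constant vector has no range, so map every value to new_min
        return [new_min for _ in vector]
    return [new_min + (x - lo) * (new_max - new_min) / span for x in vector]


def min_max_scale_rows(matrix, new_min=0.0, new_max=1.0):
    # Hypothetical helper: scale each row independently, as the response above suggests
    return [min_max_scale(row, new_min, new_max) for row in matrix]


a = [20, 30, 40, 50]
b = [200, 300, 400, 500]
# Both rows become [0.0, 0.333..., 0.666..., 1.0]
print(min_max_scale_rows([a, b]))
```

Because b is a tenfold multiple of a, both rows land on exactly the same scaled values, which is why the two vectors in the response are identical.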

Standardizing

Standardizing scales a vector so that it has a mean of 0 and a standard deviation of 1. Standardization can be used with machine learning algorithms, such as SVM, that perform better when the data has a normal distribution.

Standardization does lose information about the data if the underlying vectors don't fit a normal distribution, so use it with care.

Here is an example of how to standardize a matrix:

let(a=array(20, 30, 40, 50),
    b=array(200, 300, 400, 500),
    c=matrix(a, b),
    d=standardize(c))

Solr responds with the vectors of the standardized matrix:

{
  "result-set": {
    "docs": [
      {
        "d": [
          [-1.161895003862225, -0.3872983346207417, 0.3872983346207417, 1.161895003862225],
          [-1.1618950038622249, -0.38729833462074165, 0.38729833462074165, 1.1618950038622249]
        ]
      },
      {
        "EOF": true,
        "RESPONSE_TIME": 17
      }
    ]
  }
}
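The values in this response are consistent with dividing by the sample standard deviation (denominator n - 1). For the first row the mean is 35, the sample standard deviation is sqrt(500 / 3) ≈ 12.91, and (20 - 35) / 12.91 ≈ -1.1619. The Python sketch below reproduces that arithmetic with the standard library's `statistics` module; it illustrates the math and is not Solr's code, and the `standardize` function name is hypothetical. Using the population standard deviation (`statistics.pstdev`) instead would give about -1.3416 for the first element.

```python
from statistics import mean, stdev


def standardize(vector):
    """Shift and scale vector to mean 0 and standard deviation 1."""
    m = mean(vector)
    s = stdev(vector)  # sample standard deviation (n - 1 denominator)
    return [(x - m) / s for x in vector]


for row in ([20, 30, 40, 50], [200, 300, 400, 500]):
    # Each row comes out as roughly [-1.1619, -0.3873, 0.3873, 1.1619]
    print(standardize(row))
```

As in the response, the two rows agree up to floating-point rounding in the last digits.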

Unitizing

Unitizing scales vectors to a magnitude (Euclidean length) of 1. A vector with a magnitude of 1 is known as a unit vector. Unit vectors are preferred when the vector math deals with vector direction rather than magnitude.

Here is an example of how to unitize a matrix:

let(a=array(20, 30, 40, 50),
    b=array(200, 300, 400, 500),
    c=matrix(a, b),
    d=unitize(c))

Solr responds with the vectors of the unitized matrix:

{
  "result-set": {
    "docs": [
      {
        "d": [
          [0.2721655269759087, 0.40824829046386296, 0.5443310539518174, 0.6804138174397716],
          [0.2721655269759087, 0.4082482904638631, 0.5443310539518174, 0.6804138174397717]
        ]
      },
      {
        "EOF": true,
        "RESPONSE_TIME": 6
      }
    ]
  }
}
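The numbers above can be checked by hand. For the first row the magnitude is sqrt(20² + 30² + 40² + 50²) = sqrt(5400) ≈ 73.48, and 20 / 73.48 ≈ 0.2722, matching the first value in the response. The Python sketch below illustrates this L2 normalization; it is not Solr's implementation, and the `unitize` function name is hypothetical.

```python
import math


def unitize(vector):
    """Divide each element by the vector's Euclidean (L2) norm so the result has magnitude 1."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


for row in ([20, 30, 40, 50], [200, 300, 400, 500]):
    # Each row comes out as roughly [0.2722, 0.4082, 0.5443, 0.6804]
    print(unitize(row))
```

Since both rows point in the same direction, they map to the same unit vector; the tiny differences in the last digits of the response are floating-point rounding.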

Normalized Sum

The final feature scaling function is normalizeSum, which scales a vector so that it sums to a specific value. By default it scales the vector so that it sums to 1. This technique is useful when you want to convert vectors of raw counts to vectors of probabilities.
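Converting counts to probabilities amounts to dividing each element by the vector's sum and multiplying by the target total. The Python sketch below illustrates that arithmetic; it is not Solr's implementation. The `normalize_sum` name and its `total` parameter are hypothetical, and raising an error for a vector that sums to 0 is an assumption rather than documented Solr behavior.

```python
def normalize_sum(vector, total=1.0):
    """Scale vector so that its elements add up to `total` (1.0 by default)."""
    s = sum(vector)
    if s == 0:
        # Assumption: a zero-sum vector cannot be rescaled, so treat it as an error
        raise ValueError("cannot normalize a vector that sums to 0")
    return [x * total / s for x in vector]


counts = [20, 30, 40, 50]       # raw counts, summing to 140
probs = normalize_sum(counts)   # each count divided by 140, e.g. 20 / 140 ≈ 0.142857
print(probs)
```

If the function is applied to each row independently, as the other scaling functions are, the rows built from a and b would produce the same probabilities, because b is a tenfold multiple of a.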

Below is the sample code for applying the normalizeSum function:

let(a=array(20, 30, 40, 50),
    b=array(200, 300, 400, 500),
    c=matrix(a, b),
    d=normalizeSum(c))

Solr responds with the vectors scaled to a sum of 1: