Note: If you're not familiar with graph concepts such as nodes and edges, it may be useful to first review the Wiki on Graph Theory.

This blog is part one of a three-part series on making recommendations with Graph Expressions.

The three parts are:

1) Finding products that go well together.

2) Using the crowd to find products that go well with a user.

3) Combining Graph Expressions to make a personalized recommendation.

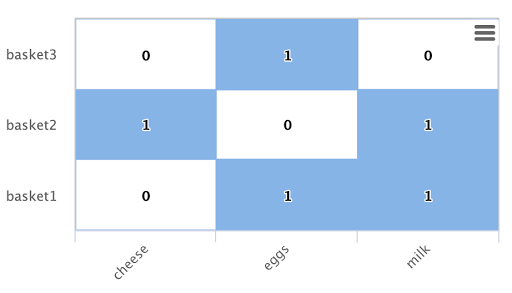

Before diving into the first part of the recommendation, let's consider the data. For all three blogs we'll be using a simple SolrCloud collection called baskets in the following format:

userID basketID productID

user1 basket1 productA

user1 basket1 productA

user1 basket2 productL

user2 basket3 productD

user2 basket3 productM

...

The baskets collection holds all the products that have been added to baskets. Each record has a userID, basketID and productID. We'll be able to use Graph Expressions to mine this data for recommendations.

One more quick note before we get started. One of the main expressions we'll be using is the nodes expression, which was originally released as the gatherNodes expression. Starting with Solr 6.4, the nodes function name can be used as a shorthand for gatherNodes. You can still use gatherNodes if you like; both names point to the same function.

Now let's get started!

Finding Products That Go Well Together

One approach to recommending products is to start with a product the user has selected and find products that go well with that product.

The Graph Expression below finds the products that go well with productA:

scoreNodes(top(n=25,

sort="count(*) desc",

nodes(baskets,

random(baskets, q="productID:productA", fl="basketID", rows="250"),

walk="basketID->basketID",

gather="productID",

fq="-productID:productA",

count(*))))
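To try the expression, it can be sent to the collection's /stream handler as the expr parameter. The sketch below only builds the HTTP request with Python's standard library. The host, port, and the helper name build_stream_request are assumptions for illustration and are not part of the setup described in this blog.

```python
from urllib.parse import urlencode
from urllib.request import Request

# The expression from this post, kept as a plain string.
EXPR = """scoreNodes(top(n=25,
               sort="count(*) desc",
               nodes(baskets,
                     random(baskets, q="productID:productA", fl="basketID", rows="250"),
                     walk="basketID->basketID",
                     gather="productID",
                     fq="-productID:productA",
                     count(*))))"""


def build_stream_request(expr, collection="baskets",
                         base_url="http://localhost:8983/solr"):
    """Build a POST request for the /stream handler.

    base_url is an assumed default for a local Solr node.
    """
    body = urlencode({"expr": expr}).encode("utf-8")
    return Request(
        f"{base_url}/{collection}/stream",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


request = build_stream_request(EXPR)
# To run it against a live Solr node:
#   from urllib.request import urlopen
#   print(urlopen(request).read().decode("utf-8"))
```

Building the request separately from sending it makes it easy to inspect the encoded expression before pointing it at a real cluster.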

Seeding the Graph Expression

The inner random expression is used to seed the Graph Expression:

random(baskets, q="productID:productA", fl="basketID", rows="250")

The random expression is not a Graph Expression, but in this scenario it's used to seed a Graph Expression with a set of root nodes to begin the traversal.

The random expression returns a pseudo-random set of results that match the query. In this case the random expression is returning 250 basketIDs for baskets that contain productA.

The random expression serves two important purposes in seeding the Graph Expression:

1) It limits the scope of the graph traversal to 250 basketIDs. If we seeded the graph traversal with all the basketIDs that have productA, we could potentially have a very large number of baskets to work with. This could cause a slow traversal and memory problems, as graph traversals are tracked in memory.

2) It adds an element of surprise to the recommendation by providing a different set of baskets each time. This can result in different recommendations because each recommendation is seeded with a different set of basketIDs.
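The seeding step can be imitated in plain Python to make the idea concrete. This is a minimal sketch, not how Solr implements random: the RECORDS list is a made-up in-memory stand-in for the baskets collection, and seed_baskets is a hypothetical helper name.

```python
import random

# Made-up stand-in for the baskets collection:
# one (userID, basketID, productID) tuple per record.
RECORDS = [
    ("user1", "basket1", "productA"),
    ("user1", "basket1", "productB"),
    ("user2", "basket2", "productA"),
    ("user2", "basket2", "productC"),
    ("user3", "basket3", "productA"),
    ("user4", "basket4", "productB"),
]


def seed_baskets(records, product_id, rows=250, rng=None):
    """Return up to `rows` randomly chosen basketIDs from records matching product_id."""
    rng = rng or random.Random()
    # Equivalent of q="productID:productA" with fl="basketID".
    matching = [basket for _, basket, product in records if product == product_id]
    # Equivalent of rows="250": cap the number of seeds.
    return rng.sample(matching, min(rows, len(matching)))


seeds = seed_baskets(RECORDS, "productA", rows=2)
```

Because the sample is drawn fresh on every call, two calls can return different seed baskets, which is the source of the "element of surprise" described above.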

Calculating Market Basket Co-Occurrence with the Nodes Expression

Now let's explore the nodes expression, which wraps the random expression. The nodes expression performs a breadth-first graph traversal step, gathering nodes and aggregations along the way. For a full explanation of the nodes expression you can review the online documentation.

Let's look at exactly how the example nodes expression operates:

nodes(baskets,

random(baskets, q="productID:productA", fl="basketID", rows="250"),

walk="basketID->basketID",

fq="-productID:productA",

gather="productID",

count(*))

Here is an explanation of the parameters:

- baskets: This is the collection that the nodes expression is gathering data from.

- random expression: Seeds the nodes expression with a set of pseudo-random basketIDs that contain productA.

- walk: Walks a relationship in the graph. The basketID->basketID construct tells the nodes expression to take the basketID in the tuples emitted by the random expression and search them against the basketID in the index.

- fq: Is a filter query that filters the results of the walk parameter. In this case it filters out records with productA in the productID field. This stops productA from being a recommendation for itself.

- gather: Specifies what field to collect from the rows that are returned by the walk parameter. In this case it is gathering the productID field.

- count(*): This is a graph aggregation that counts the occurrences of what was gathered. In this case it counts how many times each productID was gathered.

In plain English, this nodes expression is gathering the productIDs that co-occur with productA in baskets, and counting how many times the products co-occur.
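The same walk, fq, gather and count(*) steps can be sketched over an in-memory list of records. This is only an illustration of the logic under assumed sample data; the RECORDS list and the co_occurrence_counts helper are hypothetical and do not reflect how Solr executes the traversal.

```python
from collections import Counter

# Made-up (userID, basketID, productID) records.
RECORDS = [
    ("user1", "basket1", "productA"),
    ("user1", "basket1", "productB"),
    ("user1", "basket1", "productC"),
    ("user2", "basket2", "productA"),
    ("user2", "basket2", "productB"),
    ("user3", "basket3", "productA"),
    ("user3", "basket3", "productD"),
    ("user4", "basket4", "productB"),
    ("user4", "basket4", "productC"),
]


def co_occurrence_counts(records, seed_basket_ids, exclude_product):
    """Count how often each product appears in the seed baskets."""
    seeds = set(seed_basket_ids)
    counts = Counter()
    for _, basket, product in records:
        # walk="basketID->basketID": keep records whose basketID is a seed.
        # fq="-productID:productA": drop the product we started from.
        if basket in seeds and product != exclude_product:
            # gather="productID" with count(*): tally each gathered product.
            counts[product] += 1
    return counts


counts = co_occurrence_counts(RECORDS, ["basket1", "basket2", "basket3"], "productA")
```

Note that basket4 never contributes to the counts, since it is not one of the seed baskets containing productA.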

Scoring the Nodes To Find the Most Significant Product Relationships

With the output of the nodes expression we already know which products co-occur most frequently with productA. But there is something we don't know yet: how often the products occur across all the baskets. If a product occurs in a large percentage of baskets, then it doesn't have any particular relevance to productA.

This is where the scoreNodes function does its magic:

scoreNodes(top(n=25,

sort="count(*) desc",

nodes(baskets,

random(baskets, q="productID:productA", fl="basketID", rows="250"),

walk="basketID->basketID",

gather="productID",

fq="-productID:productA",

count(*))))

In the expression above, the top function emits the top 25 products based on the co-occurrence count. The top 25 products are then scored by the scoreNodes function.
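The effect of the top step is easy to picture with a small Python sketch. The counts below are invented for illustration, and top_by_count is a hypothetical helper rather than anything Solr provides.

```python
import heapq


def top_by_count(counts, n=25):
    """Keep the n (productID, count) pairs with the highest counts, best first."""
    return heapq.nlargest(n, counts.items(), key=lambda item: item[1])


# Invented co-occurrence counts, as the nodes step might produce them.
counts = {"productB": 40, "productC": 7, "productD": 19, "productE": 3}
top_two = top_by_count(counts, n=2)
```

Trimming to the top n before scoring keeps the number of nodes that need collection-wide statistics small.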

The scoreNodes function scores the products based on the raw co-occurrence counts and their frequency across the entire collection.

The scoring formula is similar to the tf*idf scoring algorithm used to score results from a full-text search. In the full-text context, tf (term frequency) is the number of times the term appears in the document. idf (inverse document frequency) is computed from the document frequency of the term, that is, how many documents the term appears in. The idf is used to provide a boost to rarer terms.

scoreNodes uses the same principle to score nodes in a graph traversal. The count(*) aggregation is used as the tf value in the formula. The idf is computed for each node, in this case productID, based on global statistics across the entire collection. The effect of the scoreNodes algorithm is to provide a boost to nodes that are rarer in the collection.

The scoreNodes function adds a field to each node tuple called nodeScore, which is the relevance score for the node.
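A rough Python sketch shows how a tf*idf-style score can reorder products. The smoothed idf used here is an assumption chosen for illustration, and the exact formula inside scoreNodes may differ. The counts, document frequencies and collection size are invented, and the tuple layout is simplified.

```python
import math


def score_nodes(counts, doc_freqs, num_docs):
    """Attach a tf*idf-style nodeScore to each product and sort by it."""
    scored = []
    for product, tf in counts.items():
        # Assumed smoothed idf: products that are rare in the collection get a larger value.
        idf = math.log((num_docs + 1) / (doc_freqs[product] + 1)) + 1.0
        scored.append({"node": product, "count(*)": tf, "nodeScore": tf * idf})
    return sorted(scored, key=lambda t: t["nodeScore"], reverse=True)


# Invented numbers: productB co-occurs more often with productA,
# but it also appears in half of all records in the collection.
counts = {"productB": 40, "productD": 19}
doc_freqs = {"productB": 5000, "productD": 60}
scored = score_nodes(counts, doc_freqs, num_docs=10000)
```

With these numbers productD ends up ahead of productB despite its lower raw count, because productB is common everywhere and so says little about productA in particular.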

Now we know which products have the most significant relationship with productA.

Can We Still Do Better?

Yes. We now know which products have the most significant relationship with productA. But we don't know if the user will have an interest in the product(s) we're recommending. In the next blog in the series we'll explore a graph expression that uses connections in the graph to personalize the recommendation.