The analyze Stream Evaluator

One of the features added in Solr 6.5 was Stream Evaluators. Stream Evaluators perform operations on Tuples in the stream. Solr 6.5 already has a rich set of math and boolean Stream Evaluators, with more coming in Solr 6.6. The math and boolean Stream Evaluators allow you to build complex boolean logic and mathematical formulas on Tuples in the stream.

Solr 6.6 also has a new Stream Evaluator, called analyze, that works with text. The analyze evaluator applies a Lucene/Solr analyzer to a text field in the Tuples and returns a list of tokens produced by the analyzer. The tokens can then be used to annotate Tuples or streamed out as Tuples. We'll show examples of both approaches later in the blog.

But it's useful to talk about the power behind Lucene/Solr analyzers first. Lucene/Solr has a large set of analyzers that tokenize different languages and apply filters that transform the token stream. The "analyzer chain" design allows you to chain tokenizers and filters together to perform very powerful text transformations and extractions.

The analysis chain also provides a pluggable API for adding new NLP tokenizers and filters to Solr. New tokenizers and filters can be added and then layered with existing tokenizers and filters in interesting ways. New NLP analysis chains can then be used both during indexing and with Streaming NLP.

The cartesianProduct Streaming Expression

The cartesianProduct Streaming Expression is also new in Solr 6.6. The cartesianProduct expression emits a stream of Tuples from a single Tuple by creating a cartesian product from a multi-valued field or a text field. The analyze Stream Evaluator is used with the cartesianProduct Streaming Expression to create a cartesian product from a text field.

Here is a very simple example:

For this example we have indexed a single record in Solr with an id and text field called body:

id: 1

body: "c d e f g"

The following expression will create a cartesian product from this Tuple:

cartesianProduct(search(collection, q="id:1", fl="id, body", sort="id desc"),

analyze(body, analyzerField) as outField)

First, let's look at what this expression is doing, then look at the output.

The cartesianProduct expression is wrapping a search expression and an analyze Stream Evaluator. The cartesianProduct expression reads the Tuples returned by the search expression and applies the analyze Stream Evaluator to each Tuple. (Note that the cartesianProduct expression can read Tuples from any Streaming Expression.)

The analyze Stream Evaluator takes the text from the body field of the Tuple and applies an analyzer from the schema, identified by the analyzerField parameter.

The cartesianProduct function emits one Tuple for each token produced by the analyzer. For example, with a basic whitespace-tokenizing analyzer, the Tuples emitted would be:

id: 1

outField: c

id: 1

outField: d

id: 1

outField: e

id: 1

outField: f

id: 1

outField: g

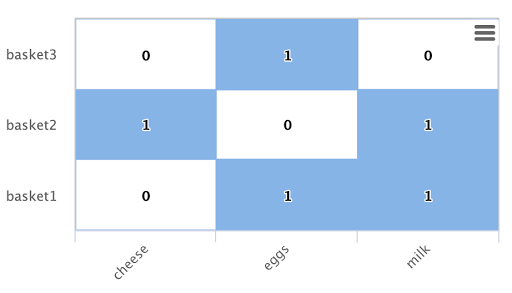
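To make the fan-out concrete, here is a minimal Python sketch that imitates what the expression above does to the example record. It is a simulation only, not how Solr implements cartesianProduct: the names cartesian_product and whitespace_analyzer are hypothetical, the analyzer is a plain str.split() stand-in for a whitespace-tokenizing analyzer, and copying every source field onto each emitted Tuple is an assumption (the listing above shows only id and outField).

```python
from typing import Callable, Dict, Iterable, Iterator, List

Record = Dict[str, object]


def whitespace_analyzer(text: str) -> List[str]:
    """Hypothetical stand-in for a whitespace-tokenizing analyzer."""
    return text.split()


def cartesian_product(
    tuples: Iterable[Record],
    text_field: str,
    analyzer: Callable[[str], List[str]],
    out_field: str,
) -> Iterator[Record]:
    """Emit one output record per token produced from text_field."""
    for record in tuples:
        for token in analyzer(str(record[text_field])):
            emitted = dict(record)      # assumption: source fields are carried along
            emitted[out_field] = token  # one token per emitted record
            yield emitted


if __name__ == "__main__":
    docs = [{"id": "1", "body": "c d e f g"}]
    for row in cartesian_product(docs, "body", whitespace_analyzer, "outField"):
        print(row["id"], row["outField"])
```

Swapping in a different analyzer function changes which tokens are emitted, which mirrors the role the analyzerField parameter plays in the real expression.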

Creating Entity Graphs

The Tuples emitted by the cartesianProduct and the analyze evaluator can be saved to another Solr Cloud collection with the update stream. This allows you to build graphs from extracted entities that can then be walked with Solr Graph Expressions.
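As a rough sketch of how the save step could be wired up, the Python below wraps the earlier cartesianProduct expression in an update expression and builds a request URL for Solr's /stream handler, which takes the expression in the expr parameter. The destination collection name (entities), the batch size, the host and port, and both helper function names are assumptions made for illustration. Note also that every emitted Tuple in the example carries the same id, so a real pipeline would likely need to assign distinct ids before indexing; that step is left out here.

```python
from urllib.parse import urlencode


def build_update_expr(dest: str, source: str, analyzer_field: str,
                      batch_size: int = 250) -> str:
    """Wrap the cartesianProduct expression in an update expression."""
    inner = (
        f'cartesianProduct(search({source}, q="id:1", fl="id, body", sort="id desc"), '
        f'analyze(body, {analyzer_field}) as outField)'
    )
    return f'update({dest}, batchSize={batch_size}, {inner})'


def build_stream_url(base_url: str, collection: str, expr: str) -> str:
    """Build a /stream URL with the expression URL-encoded in 'expr'."""
    return f"{base_url}/{collection}/stream?{urlencode({'expr': expr})}"


if __name__ == "__main__":
    # Hypothetical names: 'entities' destination, local Solr on port 8983.
    expr = build_update_expr("entities", "collection", "analyzerField")
    print(expr)
    print(build_stream_url("http://localhost:8983/solr", "collection", expr))
```

The sketch only constructs the request; sending it and reading the response stream is left to whatever HTTP client you prefer.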

Annotating Tuples

The analyze Stream Evaluator can also be used with the select Streaming Expression to annotate Tuples with tokens extracted by an analyzer. Here is the sample syntax:

select(search(collection, q="id:1", fl="id, body", sort="id desc"),

id,

analyze(body, analyzerField) as outField)

This will add a field to each Tuple containing the list of tokens extracted by the analyzer. The update function can be used to save the annotated Tuples to another Solr Cloud collection.
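The difference from cartesianProduct is that the Tuple count stays the same and the tokens arrive as a list on each Tuple. The following Python sketch imitates that behavior for the example record. It is a simulation under stated assumptions, not Solr's implementation: select_with_analyze and whitespace_analyzer are hypothetical names, and str.split() stands in for a whitespace-tokenizing analyzer.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Record = Dict[str, object]


def whitespace_analyzer(text: str) -> List[str]:
    """Hypothetical stand-in for a whitespace-tokenizing analyzer."""
    return text.split()


def select_with_analyze(
    tuples: Iterable[Record],
    keep_fields: List[str],
    text_field: str,
    analyzer: Callable[[str], List[str]],
    out_field: str,
) -> Iterator[Record]:
    """Keep the selected fields and attach the token list as out_field."""
    for record in tuples:
        annotated = {name: record[name] for name in keep_fields}
        annotated[out_field] = analyzer(str(record[text_field]))
        yield annotated


if __name__ == "__main__":
    docs = [{"id": "1", "body": "c d e f g"}]
    for row in select_with_analyze(docs, ["id"], "body",
                                   whitespace_analyzer, "outField"):
        print(row)
```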

Scaling Up

Solr's parallel batch and executor framework can be used to apply a massive amount of computing power to perform NLP on extremely large data sets. You can read about the parallel batch and the executor framework in these blogs: